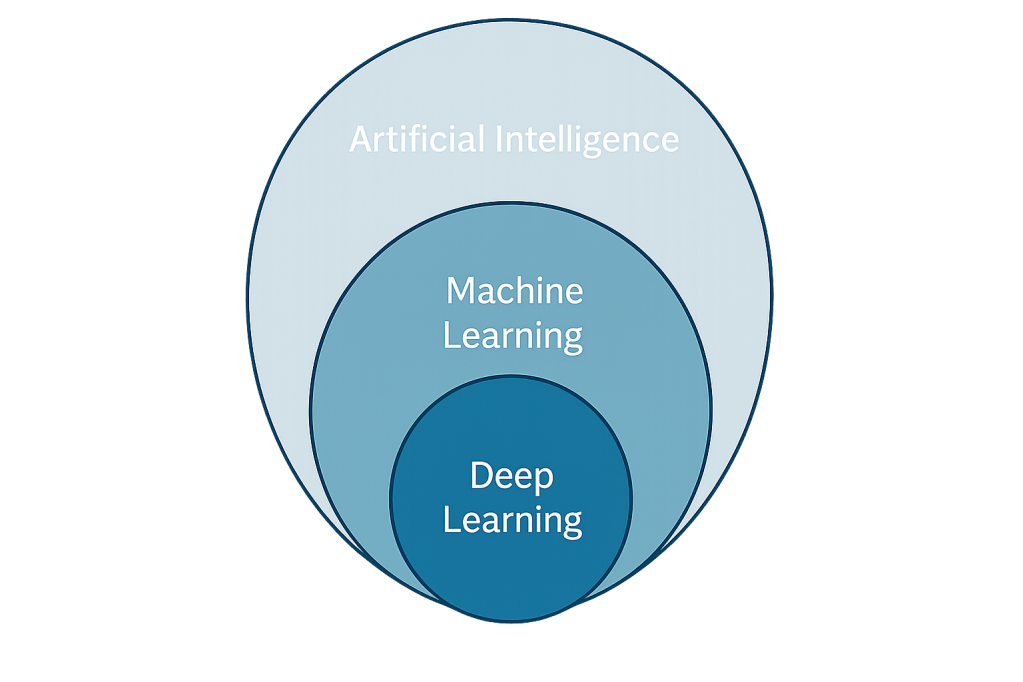

Although closely related, Artificial Intelligence, Machine Learning, and Deep Learning are not interchangeable terms. Artificial Intelligence is the overarching field; Machine Learning is a subfield of it, and Deep Learning is in turn a subfield of Machine Learning.

Artificial Intelligence

Artificial Intelligence (AI) is a broad, multidisciplinary field dedicated to creating systems that can perform tasks typically associated with human intelligence. These tasks include decision-making, learning (the acquisition of knowledge through experience), reasoning (the application of knowledge to draw conclusions, make inferences, and solve problems), pattern recognition, and language understanding. For many researchers, the long-term ambition extends beyond mimicking human capabilities in these areas to surpassing them.

In 1950, Alan Turing proposed a test, which he called the imitation game: if a machine could hold a conversation with a human without the human realizing it was a machine, the machine could reasonably be said to think. This idea became a philosophical touchstone for AI research. The term “Artificial Intelligence” was coined by John McCarthy in the proposal for the 1956 Dartmouth Conference, an event that sparked a period of enthusiasm and optimism in the field. During this initial phase, symbolic (or rule-based) AI was the dominant approach. However, limited funding and the modest computational power available in the 1970s led to a period of stagnation with few major developments, often called the first “AI winter.”

The 1980s ushered in a renewed wave of enthusiasm. Symbolic AI regained momentum, with expert systems as its key application. Efforts to move AI from academic research into practical business solutions also began to yield results: reportedly, two-thirds of Fortune 500 companies applied expert system technology in their daily operations during this decade. Two influential systems that paved the way were DENDRAL (for chemical structure elucidation), begun in the 1960s, and MYCIN (for medical diagnosis), developed in the 1970s. The optimism was soon tempered by limitations such as the difficulty of acquiring and encoding expert knowledge and the systems’ inability to learn and adapt, which led to another period of stagnation in the field.

Advances in computational power and data availability in the early 2000s revived researchers’ interest in AI, and the rise of Machine Learning and Deep Learning reinvigorated the field.

Machine Learning

Artificial Intelligence was initially reliant on human-coded rules and if-then statements, an approach known as symbolic AI. While these systems could tackle certain reasoning tasks, they weren’t truly learning; they merely followed rules provided by a programmer. This began to change with the advent of Machine Learning, a subfield of AI in which computers learn from data rather than being explicitly programmed.
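
To make the contrast concrete, here is a minimal Python sketch. It assumes a toy spam filter that looks only at the number of exclamation marks in a message; the data, the function names, and the threshold of 5 are all invented for illustration. The first function encodes a rule chosen by a programmer, while the second derives its threshold from labeled examples.

```python
def rule_based_is_spam(exclamations):
    """Symbolic approach: a programmer picks the threshold by hand."""
    return exclamations >= 5  # hand-picked value, an assumption for this sketch


def learn_threshold(examples):
    """Learning approach: choose the threshold that misclassifies the fewest examples."""
    candidates = sorted({count for count, _ in examples})

    def errors(threshold):
        # Count examples where "count >= threshold" disagrees with the label
        return sum((count >= threshold) != label for count, label in examples)

    return min(candidates, key=errors)


# Invented training data: (number of exclamation marks, is the message spam?)
training_data = [(0, False), (1, False), (2, False), (3, True), (4, True), (7, True)]

learned_threshold = learn_threshold(training_data)


def learned_is_spam(exclamations):
    """Same decision shape as the rule, but the threshold came from the data."""
    return exclamations >= learned_threshold


print(learned_threshold)      # 3
print(rule_based_is_spam(3))  # False: the hand-written rule misses this case
print(learned_is_spam(3))     # True: the learned threshold fits the examples
```

If the training data changes, the learned threshold changes with it and no one has to edit the code, which is the flexibility that separates this approach from a fixed rule.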

This shift meant that systems became more flexible, capable of generalizing and adapting, and able to improve automatically. Machine Learning can be subdivided into three main types:

- Supervised Learning: learns a mapping from inputs to a known target variable using labeled examples (e.g., classification and regression).

- Unsupervised Learning: identifies patterns, such as clusters, in unlabeled data.

- Reinforcement Learning: an agent learns through trial and error, receiving rewards or penalties for its actions.
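
The three types above can be sketched in a few lines of plain Python each. These are deliberately tiny stand-ins rather than production algorithms: a one-nearest-neighbor classifier for supervised learning, a two-cluster k-means on one-dimensional points for unsupervised learning, and a try-each-action-then-exploit loop in a deterministic toy environment for reinforcement learning. All data, names, and reward values are invented for illustration.

```python
def nearest_neighbor_predict(labeled, x):
    """Supervised: predict the label of the closest labeled example."""
    _, label = min(labeled, key=lambda pair: abs(pair[0] - x))
    return label


def two_means(points, iterations=10):
    """Unsupervised: split unlabeled 1-D points into two groups (k-means, k=2).

    Assumes the points contain at least two distinct values.
    """
    low, high = min(points), max(points)  # initial cluster centers
    for _ in range(iterations):
        near_low = [p for p in points if abs(p - low) <= abs(p - high)]
        near_high = [p for p in points if abs(p - low) > abs(p - high)]
        low = sum(near_low) / len(near_low)      # move each center to the
        high = sum(near_high) / len(near_high)   # mean of its group
    return low, high


def learn_by_trial_and_error(rewards, steps=20):
    """Reinforcement: estimate each action's value from the rewards it yields.

    `rewards` maps an action name to a fixed reward (a deterministic toy
    environment, assumed here so that the outcome is reproducible).
    """
    actions = list(rewards)
    estimates = {a: 0.0 for a in actions}
    counts = {a: 0 for a in actions}
    for step in range(steps):
        if step < len(actions):
            action = actions[step]                    # explore: try each action once
        else:
            action = max(actions, key=estimates.get)  # exploit the best estimate
        reward = rewards[action]                      # feedback from the environment
        counts[action] += 1
        # Running average of the rewards observed for this action
        estimates[action] += (reward - estimates[action]) / counts[action]
    return max(actions, key=estimates.get)


labeled = [(1.0, "small"), (2.0, "small"), (8.0, "large"), (9.0, "large")]
print(nearest_neighbor_predict(labeled, 7.5))                   # large
print(two_means([1.0, 2.0, 8.0, 9.0]))                          # (1.5, 8.5)
print(learn_by_trial_and_error({"left": -1.0, "right": 1.0}))   # right
```

Note what each function receives: the supervised one needs labels, the unsupervised one gets only raw points, and the reinforcement one sees nothing but the reward that follows each action.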

Deep Learning

Artificial Intelligence not only aims to solve problems associated with human thinking but also draws inspiration from human cognition to develop algorithms. Neural networks, a family of Machine Learning models loosely inspired by the human brain, are composed of interconnected nodes (akin to neurons) organized into layers. While traditional (shallow) neural networks have one or two hidden layers, Deep Learning networks can have dozens or even hundreds. The added depth lets them capture complex, non-linear patterns in data, though often at the cost of interpretability.
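
As a rough illustration of nodes organized into layers, the sketch below builds a tiny network in plain Python with one hidden layer of two nodes. The weights are picked by hand rather than learned, purely to show the mechanics: each node computes a weighted sum of its inputs plus a bias and passes it through an activation function (ReLU here). With these assumed weights the network computes XOR, a non-linear pattern that a single linear layer cannot represent.

```python
def relu(value):
    """Non-linear activation: negative values become zero."""
    return max(0.0, value)


def identity(value):
    """No activation, used for the output node."""
    return value


def dense(inputs, weights, biases, activation):
    """One layer: every node takes a weighted sum of all inputs, adds its bias,
    and applies the activation."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hand-picked (not learned) weights, chosen so the network computes XOR
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]  # two hidden nodes, two inputs each
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]             # one output node, two hidden inputs
OUTPUT_BIASES = [0.0]


def xor_network(x1, x2):
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    (output,) = dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, identity)
    return output


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```

A deep network stacks many such `dense` layers, and its weights are learned from data during training instead of being set by hand.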

In traditional Machine Learning, practitioners are responsible for selecting and engineering features, and can sometimes achieve good results with limited data. In contrast, Deep Learning networks extract features automatically but typically require far more data and computation. Consequently, Deep Learning is usually recommended for highly complex tasks where traditional Machine Learning models would perform poorly.
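
To show what hand-crafted features look like in practice, here is a small Python sketch. The function name and the three features are hypothetical choices for a text message; a practitioner would pick them based on domain knowledge and then feed them to a traditional model. A Deep Learning model would instead be given the raw text (in some numeric encoding) and be left to discover useful features during training.

```python
def handcrafted_features(message):
    """Turn raw text into a few numeric features chosen by a person."""
    words = message.split()
    return {
        "num_words": len(words),
        "num_exclamations": message.count("!"),
        # Fraction of words written entirely in capital letters
        "share_uppercase_words": sum(w.isupper() for w in words) / max(len(words), 1),
    }


features = handcrafted_features("WIN a FREE prize now!!!")
print(features)
# {'num_words': 5, 'num_exclamations': 3, 'share_uppercase_words': 0.4}
```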

Some applications of Deep Learning include:

- Computer vision (e.g., face recognition, cancer detection)

- Natural language processing (e.g., chatbots, language translation)

- Speech processing (e.g., voice assistants, speech-to-text systems)

- Recommendation systems

To summarize

Remember that Artificial Intelligence is the broad goal of creating intelligent machines. Machine Learning is one way to achieve that goal—by enabling computers to learn from data. And Deep Learning is a powerful type of Machine Learning that uses complex neural networks.

While related, each term describes a different level of the technology and its capabilities.
